AI is gradually reaching every industry, and this has raised concerns about privacy, safety, and security. Microsoft has committed to developing AI technology that respects the privacy and security of its users.

Trustworthy AI refers to a set of best practices defined at Microsoft for building AI that is secure, private, and safe. The goal is a world where you can trust the decisions your AI systems make. Sounds great, right? In today’s fast-changing tech world, where AI powers almost everything, building trust is crucial. Without it, we risk creating biased, unreliable systems that can cause harm.

Let’s get into the details of Trustworthy AI.

Microsoft’s Responsible AI Principles

Microsoft believes AI should serve everyone fairly and safely. To make that happen, they developed Responsible AI principles. These principles guide their AI journey to make sure their systems are fair, secure, and inclusive.

Imagine using an AI system to process loan applications. If that system unfairly rejects certain groups, it’s not doing its job right. Microsoft’s fairness principle is meant to prevent exactly this.
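One simple way to check a loan system for this kind of unfairness is to compare approval rates across groups. The sketch below is only an illustration, not Microsoft’s tooling: the function names, the made-up sample data, and the 0.8 cutoff (a common rule of thumb, often called the four-fifths rule) are all assumptions for this example.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs.
    This input format is hypothetical, chosen for the example.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest approval rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Made-up decisions for two applicant groups, "A" and "B".
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold; pick one that fits your context

print(rates, round(ratio, 2), flagged)
```

A low ratio does not prove discrimination on its own, but it is a useful signal that the model deserves a closer look.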

Secure Future Initiative and Responsible AI Transparency Report

Microsoft backs up its words with actions. The Secure Future Initiative is one example. This initiative focuses on creating AI systems that are secure and trusted, especially in critical sectors like healthcare and finance.

They also release a Responsible AI Transparency Report, which is all about keeping things open. Microsoft shares what they’re doing to make AI responsible, the challenges they face, and how they solve them. Transparency is key here, as it builds trust between Microsoft, their customers, and the community.

Core Principles of Microsoft Trustworthy AI

1. Ensuring Fairness and Equal Treatment

Microsoft aims to build AI that treats everyone equally. Their systems are designed to avoid bias so that no group is left behind, and their models are regularly tested and updated to remove signs of discrimination.

2. Reliability and Safety

You need AI that works every time, under any condition. Microsoft makes sure their AI systems perform consistently, even in tough situations. Regular testing and real-world simulations ensure their AI solutions are reliable and safe.

For instance, in healthcare, where lives are at stake, AI must be reliable. Microsoft’s AI tools, used in diagnostics, have gone through rigorous testing to ensure they deliver consistent results.

3. Protecting User Data and Maintaining Confidentiality

Keeping your data private and secure is a top priority. Microsoft ensures that all AI systems protect sensitive data. They comply with laws like the GDPR to keep your information safe from breaches.

4. Engaging Diverse User Groups

Microsoft believes that AI should benefit everyone. They design AI with features that help people of all backgrounds, abilities, and cultures. Their Seeing AI app is a great example. It helps visually impaired users better interact with the world by describing people, objects, and text in real time.

5. Clear Understanding of AI Processes

Understanding how AI makes decisions is crucial. Microsoft offers tools that help you see and understand the decision-making process behind their AI models. This transparency helps you trust the technology.

6. Accountability

Microsoft holds itself accountable for the AI it builds. If something goes wrong, they take responsibility. They’ve put systems in place to trace issues back to their source and fix them quickly.

Four-Step Iterative Framework for Ethical AI Deployment

Microsoft follows a practical framework to ensure responsible AI development, helping developers identify and manage risks effectively.

1. Identify

First, you recognize and prioritize potential harms that your AI might cause. You conduct thorough assessments and test AI models to detect issues like bias, privacy violations, or security threats. This helps you focus on the most critical risks.

2. Measure

Next, you establish metrics to evaluate how often these harms occur and how severe they are. Microsoft provides tools that help you measure the frequency and impact of identified risks, ensuring they’re properly assessed.

3. Mitigate

After measuring the risks, you implement strategies to reduce or eliminate them. You refine your AI models or introduce new features that address these risks. Afterward, you reassess the system to ensure the changes effectively reduce the harm.

4. Operate

Finally, you execute a deployment plan to ensure the AI runs smoothly and meets all ethical standards. This phase ensures that your AI is fully prepared and compliant before going live.

By following this framework, you can develop AI systems that are reliable and trustworthy, aligning with Microsoft’s ethical standards.
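The Measure and Mitigate steps above can be sketched as a small before-and-after comparison. This is an illustrative example under stated assumptions: the `HarmRecord` type, the 1-to-5 severity scale, and the sample numbers are hypothetical and not part of any Microsoft tool.

```python
from dataclasses import dataclass


@dataclass
class HarmRecord:
    category: str  # e.g. "bias" or "privacy" (hypothetical labels)
    severity: int  # assumed scale: 1 (minor) to 5 (critical)


def measure(records, total_outputs):
    """Summarize harms per category.

    Returns, for each category, the frequency (share of all evaluated
    outputs that showed the harm) and the mean severity.
    """
    if total_outputs <= 0:
        raise ValueError("total_outputs must be positive")
    counts = {}
    severity_sums = {}
    for record in records:
        counts[record.category] = counts.get(record.category, 0) + 1
        severity_sums[record.category] = (
            severity_sums.get(record.category, 0) + record.severity
        )
    return {
        category: {
            "frequency": counts[category] / total_outputs,
            "mean_severity": severity_sums[category] / counts[category],
        }
        for category in counts
    }


# Made-up findings from 100 evaluated outputs, before and after mitigation.
before = [HarmRecord("bias", 4), HarmRecord("bias", 2), HarmRecord("privacy", 5)]
after = [HarmRecord("bias", 2)]

baseline = measure(before, total_outputs=100)
reassessed = measure(after, total_outputs=100)

# A category improved if its harm now occurs less often than in the baseline.
improved = {
    category: reassessed.get(category, {"frequency": 0.0})["frequency"]
    < baseline[category]["frequency"]
    for category in baseline
}
print(baseline, reassessed, improved)
```

Keeping the same metrics before and after a change is what makes the framework iterative: each mitigation is followed by a fresh measurement.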

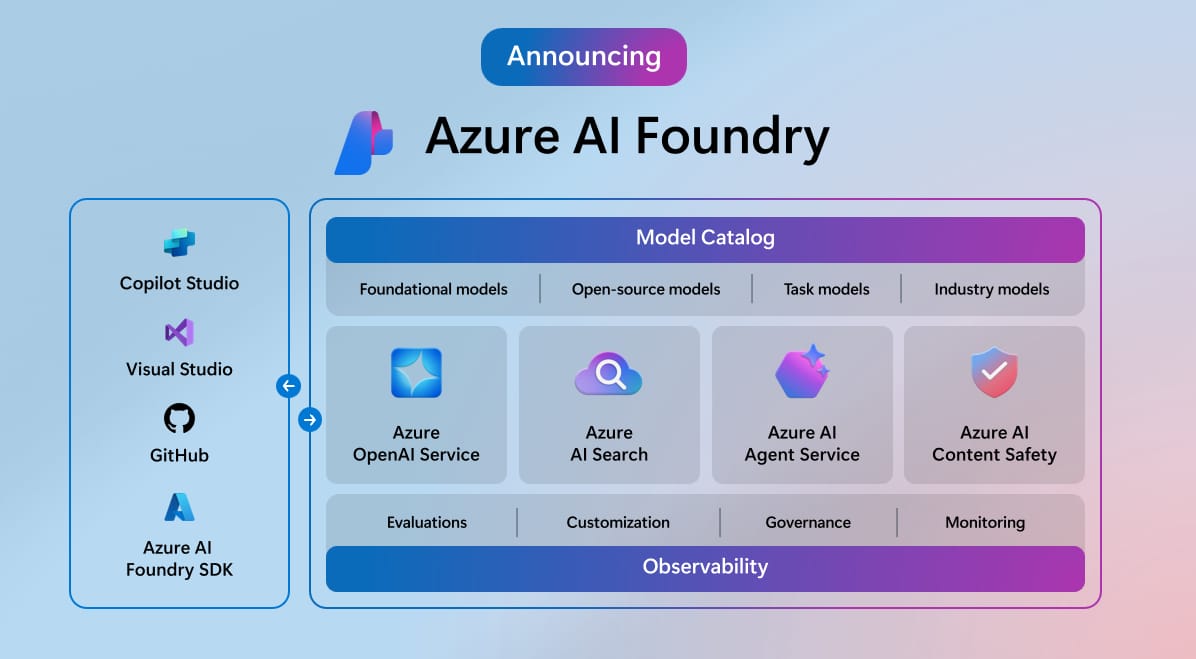

Azure AI Capabilities Supporting Trustworthy AI

Microsoft Azure AI offers a wide range of tools that help you build AI systems you can trust. These tools don’t just make development easier; they actively address risks and improve security throughout your AI projects.

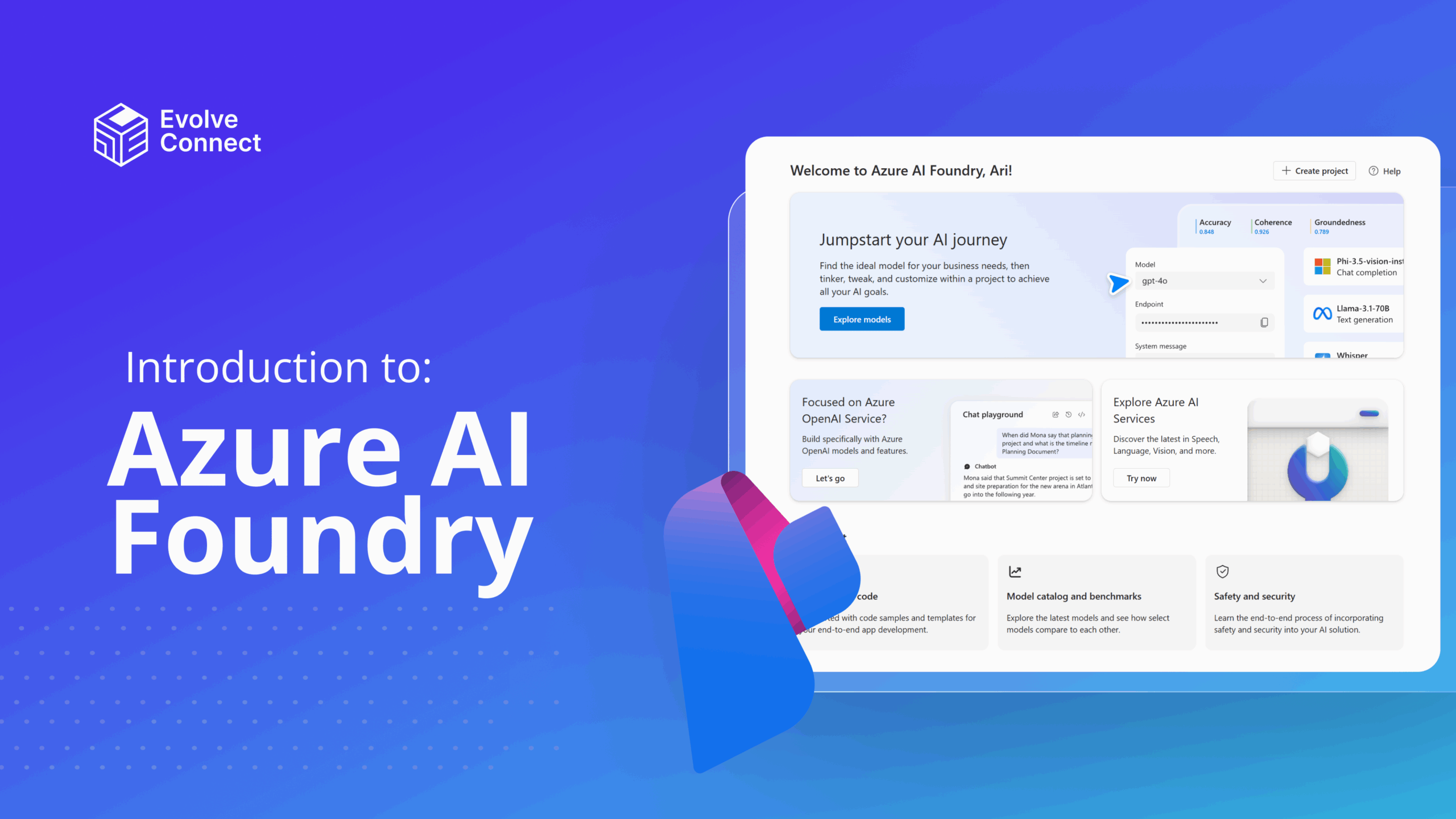

1. Azure AI Studio Evaluations

With Azure AI Studio, you can run proactive risk assessments to spot potential issues early. This helps you identify problems like bias or model errors before they get out of control. Think of it as a pre-check before you deploy your AI. For example, if you’re building an AI to analyze loan applications, Azure AI Studio helps you check that all applicants, regardless of background, are treated fairly.

2. Correction Capability in Azure AI Content Safety

AI can make mistakes. It can generate responses that are inaccurate or not supported by the source material. This is called a hallucination in the AI world. The Azure AI Content Safety tool includes a real-time correction feature that catches these hallucinations and fixes them before they reach the user. So, if you’re using AI to provide customer support, this tool helps keep the answers your system gives accurate and relevant.
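To make the idea of catching a hallucination concrete, here is a deliberately naive sketch. It is not how Azure AI Content Safety works internally; it simply flags sentences in a response whose longer words mostly do not appear in the source text. The function names, the word-length filter, and the 0.5 overlap threshold are assumptions made for this example.

```python
import re


def _content_words(text):
    """Lowercased words longer than three characters.

    A crude stand-in for "content words"; real systems use far richer signals.
    """
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 3}


def ungrounded_sentences(response, source, min_overlap=0.5):
    """Return sentences from `response` that share too few words with `source`."""
    source_words = _content_words(source)
    flagged = []
    # Split on whitespace that follows sentence-ending punctuation.
    for sentence in re.split(r"(?<=[.!?])\s+", response.strip()):
        words = _content_words(sentence)
        if not words:
            continue  # nothing to compare in this sentence
        overlap = len(words & source_words) / len(words)
        if overlap < min_overlap:
            flagged.append(sentence)
    return flagged


# Made-up customer support example.
source = "Refunds are available within 30 days of purchase with a valid receipt."
response = (
    "Refunds are available within 30 days of purchase. "
    "Shipping is always free worldwide."
)

flagged = ungrounded_sentences(response, source)
print(flagged)
```

Word overlap misses paraphrases and subtle contradictions, which is why production systems rely on dedicated groundedness models rather than a check like this.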

3. Confidential Inferencing for Secure Data Processing

Security is a major concern when handling sensitive data. With Confidential Inferencing in Azure AI, you can keep user data safe while your AI models make predictions. This means you can process private information, like medical records or financial details, without worrying about data leaks. The system uses encryption to protect data during the entire AI process, ensuring security without sacrificing performance.

Using these tools, you can build AI systems that are not only effective but also secure, fair, and responsible. These features help you follow Microsoft’s standards for Trustworthy AI while giving you the confidence to deploy your AI models in real-world situations.

Responsible AI Dashboard Features and Functionalities

Microsoft provides the Responsible AI Dashboard, a one-stop tool for developers. This dashboard helps you track your AI’s performance, check for bias, and understand how your AI models make decisions. It’s an essential tool to keep your AI systems aligned with ethical standards. Its functionalities include error analysis, model debugging, fairness assessment, and interactivity.

Conclusion

Microsoft is leading in creating AI you can trust. By focusing on fairness, transparency, and accountability, they ensure that AI systems benefit everyone. They’ve laid out a clear framework and provided powerful tools to help you build trustworthy AI in your organization. Now is the time for you to embrace these practices and ensure the AI systems you create are as trustworthy as Microsoft’s.
